USC’s Paul Debevec’s Role in The Matrix, Avatar, Gravity & More

Paul Debevec can rightfully claim that he has helped, in small ways and large, create some of the most technologically groundbreaking films of the last two decades. Debevec leads the graphics laboratory at the University of Southern California's Institute for Creative Technologies and is a research professor in the university's computer science department. He is one of the most influential academics in the film world today.

Debevec’s research and technology have been used in The Matrix sequels, Spider-Man 2, Superman Returns, The Curious Case of Benjamin Button, Terminator: Salvation, District 9, King Kong, Hancock, The Social Network, Avatar and Gravity. He's received numerous prestigious awards for his work, including a Scientific and Engineering Academy Award in 2010 for the Light Stage, a novel image-based facial rendering system that has helped make some of the biggest films of the past decade possible.

We focus on four of the most significant game-changing films on his resume: The Matrix, The Curious Case of Benjamin Button, Avatar and Gravity.

What's your origin story? How did you get involved in the filmmaking world?

I grew up a movie fan. I saw Star Wars when I was about six years old, and Back to the Future as a teenager, and I just knew that those movies were possible because of visual effects and technology. That’s how I got interested in computer graphics, and I was very fortunate that, by pursuing it at the University of Michigan and the University of California at Berkeley, I had the chance to work with these technologies very early on. I got to help innovate in some areas that created new tools for filmmakers to use. The work I was doing ended up being relevant to some projects, and the first film to apply it was The Matrix.

That’s quite an auspicious entrance into the film world.

The visual effects supervisor, John Gaeta, was trying to figure out how he was going to do the backgrounds of all the bullet-time shots. He saw the film that I made with a group of Berkeley students, called The Campanile Movie.

The Campanile Movie was created using Façade, the image-based modeling system for virtual cinematography that Debevec developed for his Ph.D. thesis.

I had taken photos of the campus, projected them onto geometry derived through photogrammetry, and flown around them virtually, in what I called virtual cinematography back then. Gaeta realized that was a potential solution to the problem he had.
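The core geometric step behind projecting photos onto reconstructed geometry is a camera projection: each 3D point on the model is mapped into a photograph to find the pixel that colors it. The sketch below is a minimal illustration assuming an ideal pinhole camera with known intrinsics `K`, rotation `R` and translation `t` (hypothetical inputs, and hypothetical function names); it is not Façade's actual implementation, which also had to recover the geometry and combine several photographs.

```python
import numpy as np

def project_point(K, R, t, X):
    """Project world point X (shape (3,)) into a photo's pixel coordinates.

    Assumes an ideal pinhole camera: K is the 3x3 intrinsic matrix,
    R (3x3) and t (3,) map world coordinates into camera coordinates.
    """
    x_cam = R @ X + t          # world -> camera coordinates
    if x_cam[2] <= 0:
        return None            # point is behind the camera, so this photo cannot color it
    uvw = K @ x_cam            # camera -> homogeneous image coordinates
    return uvw[:2] / uvw[2]    # perspective divide -> (u, v) in pixels

def texture_vertex(photo, K, R, t, X):
    """Return the color the photo assigns to 3D point X (nearest pixel), or None."""
    uv = project_point(K, R, t, X)
    if uv is None:
        return None
    u, v = np.round(uv).astype(int)
    h, w = photo.shape[:2]
    if not (0 <= u < w and 0 <= v < h):
        return None            # the point projects outside the photo
    return photo[v, u]         # images are indexed row (v) first, then column (u)
```

Repeating this lookup for every visible point of the model is what lets a virtual camera move freely while the surfaces keep the appearance of the real photographs.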

And what was that problem?

They knew they were going to do the time-slice technique, where you put a bunch of cameras in a row and fire them off at the same time. This means that the camera is effectively moving far faster than it normally could, and time seems either frozen or slowed down. The inventor of the time-slice technique is Tim MacMillan.

Tim had actually worked on a film called Wing Commander, which had what would later be renamed ‘bullet-time’; it was originally called time-slice, or the frozen moment effect.

So how did your research with virtual cinematography help The Matrix team turn time-slice into bullet-time?

Well, The Matrix folks had to find a way to really dial it up to eleven and transcend anything people had seen before. Their thought was, ‘You know what, we’re not just going to do a little bit of a move from right to left, we’re going to go all the way around the characters, three hundred and sixty degrees. And we’re going to add in all these other really cool effects, too, like bullets and smoke trails.’ What they realized is that the medium itself causes a problem, because once you’re going three-sixty around Keanu Reeves, your camera array is looking back on itself. So you have a really complicated setup that, practically speaking, you’re not going to be able to stick on top of the building where they wanted to shoot Keanu leaning back and dodging bullets.

So they realized they’d have to shoot Keanu in the studio, in a controlled setup with all the Canon A2E cameras around him, and that they’d have to somehow reconstruct a camera move through the location that would be completely consistent with how they laid out their camera array in the studio. The best way they could think to do that was to shoot the location for real, right before and right after the bullet-time shot, and figure out how to create a photo-real version of it that they could render along exactly the same camera path, so it all locks together. When John Gaeta saw my movie, where I took pictures of the Berkeley tower and its surroundings, reconstructed them in 3D, and then flew around them pretty realistically, he said, ‘That’s how we’re going to do it.’

Let’s move next to your work with David Fincher on The Curious Case of Benjamin Button.

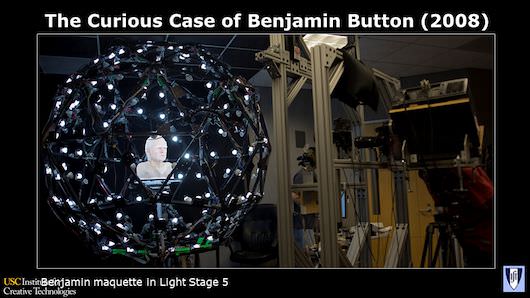

The Curious Case of Benjamin Button is really significant. Ever since I started seriously researching computer graphics, the whole idea of creating a photo-real digital human character in a movie, or in anything, was kind of the Holy Grail of computer graphics. I remember having serious conversations with other researchers in the field, in 1999 and 2000, where we didn’t know if we’d ever create a photo-real human face. There was just some sort of untouchable liveliness and quality about the face. We could render things that aren’t alive, but rendering a human face? We thought there was no way we’d get there.

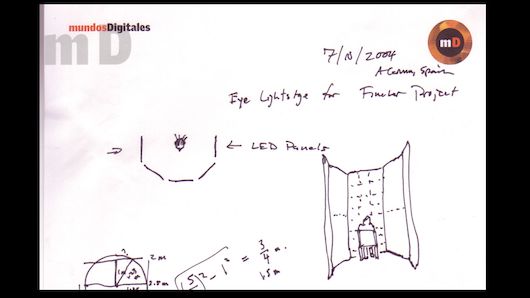

The first time I heard about the Benjamin Button project was in 2004. Digital Domain, the visual effects company that worked on the film, had been following my work, and they brought David Fincher by. Fincher was thinking that it was going to be the quality of the eyes that mattered most. The performance of an actor on a movie screen is all about the eyes, and the subtleties of how they dart around, how the little twitches of the skin around the eyes indicate that the character is thinking about something, or feeling something. Fincher knew he couldn’t get the face to look right just using old-age makeup, but at the same time, he didn’t think he’d be able to get the face looking correct solely with computer graphics. He had this hybrid idea, where they would do the computer graphics for most of the face except for the eyeballs and the area of skin around the eyes; those would be filmed for real, and they’d put it all together.

And here’s where your novel lighting techniques come in?

Well, the problem was, if we’re going to film Brad Pitt’s real eyes and composite them into a digital human head, how do you get all the lighting to be consistent? How do we light Brad Pitt’s eyes so that they’re consistent with the digital character we’re creating?

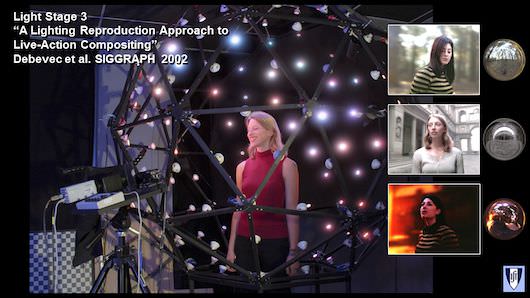

Fincher and Digital Domain were aware of some work that I published in 2002 on one of our light stage systems. This was the version we called the lighting reproduction approach, where you surround the actor with color LEDs, and if you know what the light is like in the scene that you want to put the actor in, there are ways of capturing that light and playing it back on the actor.

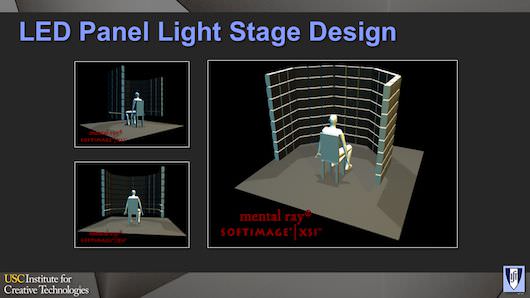

The device that we built in 2002 only had 156 points of light. That’s enough resolution to light human skin, which isn’t that shiny, but when you’re talking about lighting eyes, the reflections of the individual lights are going to look too discrete. So I suggested that we get a bunch of LED panels and build a lighting reproduction system out of them. Digital Domain thought it sounded pretty interesting, so I designed a layout where these panels formed a cube surrounding the actor. We played back some lighting environments on people, I took pictures of their faces and their eyes, and I realized that with the current LED panel technology, we could recreate light on people’s eyes, the reflections would look perfectly smooth, and it would all be good.

Yet they ended up doing exactly what you had once thought impossible—creating a completely CG human face.

Yes, but they still had to accurately match the illumination of the head in all the scenes where they replaced the body actor’s head with the old Brad Pitt head. For that they did a couple of things that leveraged our technology. One was bringing the sculpture of Brad Pitt's head as a 70-year-old man, made by Rick Baker’s makeup studio, over to our lab, where we put it in our light stage and exhaustively digitized its 3D geometry. We gave them a digital record of how this ultra-realistic sculpture of Brad Pitt’s head responds to light, so they could very quickly compute any lighting on it. The other was recording the light in each scene using my technique, whether the characters were talking at the table, he was in the bathtub, or he was having a conversation in the saloon.

And these techniques ended up winning a few awards…

The Benjamin Button team got a very well-deserved Academy Award for the visual effects, and we got a Scientific and Engineering Academy Award for the light stage work. For those Scientific and Engineering Awards, what they like to see is that the technology has had a sustained impact over a number of films. So from Spider-Man 2 to Superman Returns to King Kong and Hancock and then finally The Curious Case of Benjamin Button, it was like, ‘Okay, this thing actually made a difference in the industry.’

Let’s talk about Avatar, which really raised the technological bar.

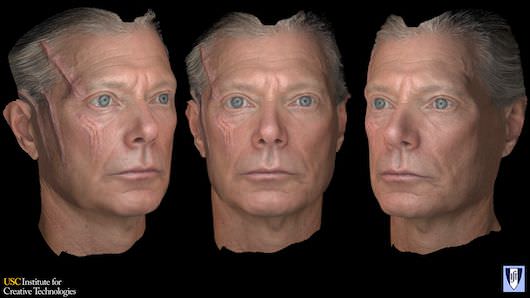

Starting in 2005 and 2006, I developed a new light stage process where we polarize all of the lights and take pictures of the subject with digital still cameras. These soft, broad lighting conditions are oriented along different principal directions: vertical, horizontal, and front to back. I found that if we do this, and do some computations on the resulting images, we can not only acquire images of how the surface responds to light from all different directions, but we can also acquire a three-dimensional model of the surface geometry that is accurate to a tenth of a millimeter.
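One way a handful of such images can yield surface orientation is by taking ratios of images lit by spherical gradient patterns. The sketch below assumes, hypothetically, that three of the lighting conditions have intensity (ω_i + 1)/2 in direction ω along the x, y and z axes, that a fourth is uniform "full-on" light, and that the surface is diffuse (Lambertian) and unoccluded. Integrating the cosine falloff over the sphere under those assumptions gives I_i / I_full = n_i/3 + 1/2, so 2·I_i/I_full − 1 is proportional to the surface normal. The function name is illustrative, and the sketch covers only per-pixel normals: the polarization-based separation of skin reflections and the step from normals to a fine geometric model are not shown.

```python
import numpy as np

def normals_from_gradients(I_x, I_y, I_z, I_full, eps=1e-8):
    """Estimate per-pixel unit normals from gradient-lit images (H x W arrays).

    Assumes each I_i was lit by the pattern (w_i + 1) / 2, I_full by uniform
    light, and a Lambertian, unoccluded surface, so that
    I_i / I_full = n_i / 3 + 1/2 at every pixel.
    """
    ratios = np.stack([I_x, I_y, I_z], axis=-1) / (I_full[..., None] + eps)
    n = 2.0 * ratios - 1.0                          # equals (2/3) * normal under the assumptions
    norm = np.linalg.norm(n, axis=-1, keepdims=True)
    return n / np.maximum(norm, eps)                # rescale to unit length
```

Because the albedo appears in both the gradient image and the full-on image, it cancels in the ratio, which is why the normal can be recovered without knowing the skin's color.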

And what does this allow a filmmaker to do?

My interest here was that I wanted to create, with photos and lighting only, a way of getting a really super accurate model of someone’s face, down to the fine creases. Previously, the only thing you could do was make a plaster cast, where you goop them up with alginate, pour plaster into the mold, and then send that off to Canada to get super high-resolution scans. That’s a great process, but you can’t capture a bunch of different facial expressions that way, because you have to keep your eyes and mouth closed or they’ll fill up with alginate.

I showed some people working on Avatar the process we had come up with, and they were very excited about the kind of detail we could pick up in faces. Within just a couple of weeks, we had the actors from Avatar, Zoë Saldana and Sam Worthington, come to our lab to get facial scans. Eventually we got Stephen Lang, Joel David Moore, and Sigourney Weaver in for facial scans as well. All of the data of their faces went to our friends at Weta Digital in New Zealand and became part of the basis for sculpting their digital stunt doubles, as well as the Na’vi Avatar versions of them.

The actors’ Na’vi counterparts do retain characteristics of the actual actors’ faces…

Yeah, since the Na’vi characters were going to be driven by the motion capture data of the real actors, they wanted to have a very strong facial correspondence between the Na’vi versions of them and the real actors. If you look at the face of Neytiri, everything from the cheekbones to how the face is shaped, how it moves, and how it’s textured is very, very closely based on the scans of Zoë Saldana. So that’s something that we helped with in Avatar. We also scanned a ton of plants for the Avatar jungle. They brought over what seemed like half the garden department of Home Depot to our laboratory for us to capture those leaf and plant textures.

How did this work lead you to Gravity?

So David Fincher didn’t use our lighting reproduction for Benjamin Button, but in the movie he made right after, The Social Network, he did end up using that approach, and he was one of the advisors on Gravity. So Fincher helped bring this technique to the attention of the filmmakers. In early 2010 I was approached by a friend of mine, Chris Watts, a visual effects supervisor who had been brought on by Warner Brothers early on, and he thought of our light stage.

So the issue was getting the lighting right, considering so much of the environment the actors interacted with was purely digital?

Yeah. Chris knew that to composite the actors into these virtual environments and have it be totally believable, we had to light the actors with the light of those environments. As Sandra Bullock moves through the environment, there has to be the right interaction of light.

We knew we had a big light stage here in Playa Vista, eight meters in diameter, and that it would be big enough, but what I ended up telling Chris is that we should test something even beyond playing the light back on the actors, and this became comprehensive facial performance capture.

So you actually suggested doing something even more technologically advanced than they were thinking of?

I thought we should try a more advanced light stage approach where we’d actually give you post-production control of the viewpoint it was shot from and the lighting that was on the actor. You shoot with high-speed cameras and rapidly change the light from one lighting condition to the next, and the next, and the next. Then you have enough information from all these different lighting conditions, within every twenty-fourth of a second, that you can compute an image of the face lit by completely different light after the fact. You can even render out a view of the face from a new position.
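Computing the face under new light after the fact rests on the linearity of light transport: if each captured frame shows the face under one known lighting condition, then the face under any combination of those lights is the matching weighted sum of the frames. The minimal sketch below assumes each condition is a single light and that the target environment has already been reduced to one RGB weight per condition; the function name and array layout are hypothetical. A real time-multiplexed capture would also have to deal with the actor moving between frames, and rendering from a new viewpoint needs more than this.

```python
import numpy as np

def relight(basis_images, light_weights):
    """Relight a face from images captured under individual lighting conditions.

    basis_images:  array (N, H, W, 3), one image per lighting condition
    light_weights: array (N, 3), RGB intensity the new environment assigns
                   to each of those lighting conditions (assumed precomputed)
    Because light adds linearly, the relit image is the per-channel
    weighted sum of the basis images.
    """
    basis = np.asarray(basis_images, dtype=float)
    w = np.asarray(light_weights, dtype=float)
    return np.einsum("nhwc,nc->hwc", basis, w)  # sum over conditions n
```

Any new environment is then just a different set of weights applied to the same captured frames, which is what makes the lighting a post-production decision.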

But this proved to be a little beyond what was possible for this film, which was already inventing a novel approach to production as they went along?

So we shot that on March 31st, 2010. They brought in Alfonso Cuarón, and he was dazzled by the light stage technology and thought it was really cool. We had five high-speed cameras set up, filming at higher than high-definition resolution. Tim Webber from Framestore, the visual effects company that worked on Gravity, was also there, and we threw in a few lighting conditions, like the Earth lighting our actor from different positions, to validate that we could compute what she looks like in those lighting conditions. The test was totally successful, but what ended up happening is they realized they’d need this technique to shoot way more of the film than they had anticipated. With multiple high-speed cameras, the sheer amount of data that generated, and the other implications it had for the production workflow, it was going to be too much. But I hope to explore that in a future movie.

So what did Cuarón and his team end up using?

They used our lighting reproduction approach of literally surrounding the actor with computer-controlled illumination. They rendered out from their computer graphics model a panoramic image of what the light is like on the actor’s face when they’re in the spacesuit, and then played that back on LEDs surrounding the actor, which is pretty close to the setup that we proposed to David Fincher for Benjamin Button.

They set that up so that computer-generated light became real light through the LEDs, shining back onto the actors’ faces. Then they put the actors’ faces into the computer-generated environment so that, as an actor spins around, the light spins around them.
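Turning a rendered panoramic image into drive levels for the surrounding LEDs can be sketched as a resampling step: each LED stands in for the patch of the panorama closest to its direction, so it should emit that patch's light integrated over its solid angle. The sketch below assumes a latitude-longitude panorama with +y up and a list of LED directions (both hypothetical conventions, as are the function names), and it ignores LED color calibration and brightness limits. With only a few lights, each one covers a large patch of the panorama, which is why a sparse rig shows up as discrete highlights in a shiny surface like an eye.

```python
import numpy as np

def latlong_directions(h, w):
    """Unit direction and solid angle of each pixel in an h x w lat-long panorama (+y up)."""
    theta = (np.arange(h) + 0.5) / h * np.pi        # polar angle measured from +y
    phi = (np.arange(w) + 0.5) / w * 2.0 * np.pi    # azimuth around the y axis
    th, ph = np.meshgrid(theta, phi, indexing="ij")
    dirs = np.stack([np.sin(th) * np.sin(ph), np.cos(th), np.sin(th) * np.cos(ph)], axis=-1)
    d_omega = np.sin(th) * (np.pi / h) * (2.0 * np.pi / w)  # pixel solid angle
    return dirs, d_omega

def led_colors(env_map, led_dirs):
    """Give each LED the summed radiance x solid angle of the pixels nearest to it.

    env_map:  array (H, W, 3) of RGB radiance in lat-long layout
    led_dirs: array (L, 3) of LED directions as seen from the actor
    Returns an (L, 3) array of RGB drive values before any LED calibration.
    """
    h, w, _ = env_map.shape
    dirs, d_omega = latlong_directions(h, w)
    led_dirs = np.asarray(led_dirs, dtype=float)
    led_dirs = led_dirs / np.linalg.norm(led_dirs, axis=1, keepdims=True)
    nearest = np.argmax(dirs.reshape(-1, 3) @ led_dirs.T, axis=1)  # largest cosine = closest LED
    weighted = env_map.reshape(-1, 3) * d_omega.reshape(-1, 1)
    out = np.zeros((len(led_dirs), 3))
    np.add.at(out, nearest, weighted)  # accumulate each pixel into its LED
    return out
```

Nearest-LED assignment keeps the total light in the panorama unchanged; a production system might instead blend each pixel across several neighboring lights to avoid hard boundaries.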

They had to invent a realistic, practical production workflow around this quite novel technology setup, in terms of feature film production, and, as we both know, the results were pretty wonderful and the movie has done quite well. It was really exciting to see this stuff go from the lab into the film.

Featured image: In 2008, visual effects company Digital Domain used detailed reflectance information captured with ICT's Light Stage 5 system to help create a computer-generated version of Brad Pitt as an old man for David Fincher's 'The Curious Case of Benjamin Button.' Courtesy Paul Debevec